I just want to get something off my chest that has been bothering me for quite some time now. It’s not going to be a rant of some sort, but merely a couple of observations for which I couldn’t find the right words up until this point. In short: this post is way overdue :-).

What’s the major malfunction with those old, classic Christmas lights? We’ve all experienced it at some point. When one goes out, all the others go out as well. This is due to the fact that these lights are wired in series. The difference compared with today’s Christmas lights is that every bulb has a shunt, which basically prevents this kind of failure caused by one or more lamps. Enough about the Christmas lights for now. Where am I going with this? Back in enterprise IT, I’m seeing the same kind of failures as with those classic, old Christmas lights.

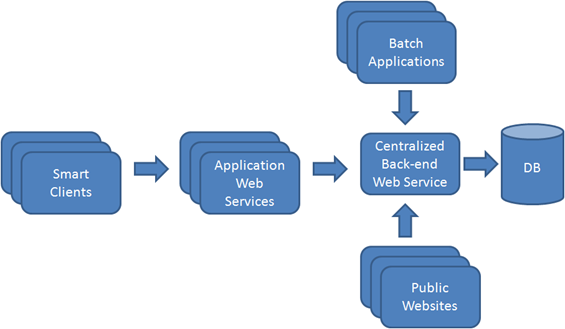

The diagram below shows a classic RPC style architecture, much like those classic, old Christmas lights.

This is all fine and dandy as long as every part of the chain runs without too much hassle. But what happens if for some reason the centralized back-end web service goes down (light bulb goes out)? Every smart client, website and batch application that uses this web service is affected, like some sort of chain reaction. Parts of these client applications will no longer function correctly or they might even go down entirely. The same thing happens when the database of the centralized back-end web service goes down, or any other external system that it depends on. When confronted with this kind of architecture, how would one go about preventing this doomsday scenario from happening?

Suppose you’re a developer who has to work on the centralized back-end web service. This is usually a complex system as it obviously has to provide features for all kinds of applications. When this centralized back-end web service also has to deal with and depend on other external systems that might exhibit some unexpected behavior, how could one prevent the sky from falling down when things go awry in production?

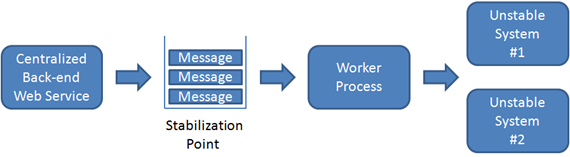

Well for starters, you could start building in some stabilization points. Suppose the centralized web service needs to incorporate some functionality offered by a highly expensive, super enterprise system that for some reason behaves unstably and unpredictably on every full moon (expensive enterprise software not behaving correctly sounds ridiculous, but bear with me 😉 ). For example, we could use a message queue as a stabilization point.

This means that we put a message on a queue that is processed by some sort of worker process or service that does the actual communication with the misbehaving system. When the external system goes down, the message is either left on the message queue or put on an error queue for later processing when the external system comes back up again. There are some other things you need to think about, like idempotent messaging, consistency, message persistence when the server goes down, etc. But if one of these dependencies goes down, the centralized back-end web service is still up and running, which means that the systems that depend on its functionality can continue to serve their users, who are able to keep doing their work.
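To make the idea a bit more concrete, here is a minimal sketch in Python. It is not a production design: the queues are in-memory `queue.Queue` objects rather than a durable message broker, and the names `Message`, `FlakyExternalSystem`, `Worker`, `drain` and `replay_errors` are all made up for illustration. It only shows the flow described above: failed messages are parked on an error queue instead of being lost, and a set of processed message ids gives a crude form of idempotency.

```python
import queue
from dataclasses import dataclass


class ExternalSystemDown(Exception):
    """Raised by the (hypothetical) external system when it is unavailable."""


@dataclass(frozen=True)
class Message:
    message_id: str
    payload: str


class FlakyExternalSystem:
    """Stand-in for the misbehaving enterprise system (an assumption)."""

    def __init__(self):
        self.available = True
        self.handled = []  # ids of messages the system actually processed

    def call(self, message):
        if not self.available:
            raise ExternalSystemDown(message.message_id)
        self.handled.append(message.message_id)


class Worker:
    """Does the actual communication with the external system."""

    def __init__(self, system):
        self.system = system
        self.work_queue = queue.Queue()
        self.error_queue = queue.Queue()
        self.processed_ids = set()  # crude idempotency guard

    def submit(self, message):
        # The web service only enqueues; it never calls the external system.
        self.work_queue.put(message)

    def drain(self):
        """Process everything currently on the work queue."""
        while not self.work_queue.empty():
            message = self.work_queue.get()
            if message.message_id in self.processed_ids:
                continue  # duplicate delivery, already handled
            try:
                self.system.call(message)
            except ExternalSystemDown:
                # Keep the original request for later processing.
                self.error_queue.put(message)
            else:
                self.processed_ids.add(message.message_id)

    def replay_errors(self):
        """Move parked messages back to the work queue and try again."""
        while not self.error_queue.empty():
            self.work_queue.put(self.error_queue.get())
        self.drain()
```

In a real system the queue would have to be persistent so that messages survive a server restart, and `replay_errors` would typically be triggered once the external system is known to be back up.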

Earlier this week I overheard this conversation that somewhat amazed me. I changed the names of the persons involved as well as the exact words used in order to protect the guilty.

George: We want to incorporate a message queue in order to guarantee stability and integrity between several non-transactional systems that our system depends on. It will also improve performance as these systems behave very slowly at times and become unstable under pressure. This also gives us the opportunity to root out some major points of friction that our end-users are experiencing right now.

Stan: But this means that the end-users are not completely sure if their actions are indeed fully carried out by the system.

George: End-users can always check the current state of affairs in their applications. If something goes wrong, their request is not lost and things will get fixed automatically later on as soon as the cause of the error has been fixed.

Stan: I don’t think that’s a very good idea. End-users have to wait until everything is processed synchronously, even if that means that they’ll need to wait for a long time. And if one of the external systems goes down, they should stop sending in new requests. Everything should come to a halt. They just have to stop doing what they are doing.

George: This means that because you lose the original request, some external systems might be set up correctly while others are not. Then someone has to manually fix these issues.

Stan: Then so be it!

For starters, I was shocked by this conversation. This is just insane. Everything should come to a halt? Think about this scenario for a while: suppose you find yourself in a grocery store with a cart full of food, drinks and other stuff. You arrive at the cash register where the lady kindly says “Can you put everything back on the shelves please? There are some issues with the cash register software and we are instructed to stop scanning items and serving customers until these issues are fixed. Can you come back tomorrow please?”. Uhm, no! How much money do you think this is going to cost compared to the system that makes use of stabilization points? An end-user who is able to keep working, whether or not the entire production system is down, has tremendous business value.

I’m not saying that message queues are a silver bullet. I’m just using these as an example. As always, there is a time and place for using them. There are other things a developer can incorporate in order to increase the stability of the system he’s working on, like the circuit breaker pattern. I’m also not saying that every system should be built using every stabilization point one can think of. This becomes a business decision depending on the kind of solution. As usual, it depends.
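For those who haven’t come across the circuit breaker pattern, the following is a rough Python sketch of the general idea, not a reference implementation. The class name, the `failure_threshold` and `reset_timeout` parameters and the injectable `clock` are my own assumptions for illustration. After a number of consecutive failures the breaker “opens” and rejects calls immediately instead of hammering a system that is already down; once the timeout has passed it lets a single trial call through.

```python
import time


class CircuitOpenError(Exception):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout=30.0,
                 clock=time.monotonic):
        self.failure_threshold = failure_threshold  # failures before opening
        self.reset_timeout = reset_timeout          # seconds to stay open
        self.clock = clock                          # injectable for testing
        self.failure_count = 0
        self.opened_at = None                       # None means closed

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                # Open: fail fast without touching the external system.
                raise CircuitOpenError("external system assumed to be down")
            # Timeout elapsed: half-open, allow one trial call through.
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failure_count += 1
            half_open = self.opened_at is not None
            if half_open or self.failure_count >= self.failure_threshold:
                # Open (or re-open) the circuit and restart the timer.
                self.opened_at = self.clock()
            raise
        # Success: close the circuit and reset the failure counter.
        self.failure_count = 0
        self.opened_at = None
        return result
```

A caller would wrap every call to the external system, for example `breaker.call(send_to_enterprise_system, request)` (where `send_to_enterprise_system` is whatever function talks to that system), and treat `CircuitOpenError` as a signal to fall back, queue the request, or tell the user to try again later.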

But the point that I’m trying to make here is that we should stop putting software systems into production and just hoping for the best. That’s just wishful thinking. Software systems are going to behave badly and at some point they will go down. It’s just a matter of when this is going to happen and how much damage it is going to do.

The first step to take is awareness. I encourage you to pick up the book titled “Release It!”, written by Michael Nygard. This book is all about designing software that can survive this tough environment called production. I can only hope that Stan picks up a copy as well, along with some common sense.

Till next time.

Sorry, but this article makes no sense. All you are saying is that instead of the WS contacting individual systems itself, to fire a message in a queue that is monitored by another service that contacts the individual systems. You can achieve the same thing by having the WS fire a thread to do the work. You mention nothing about synchronous or asynchronous communication with the actual client that requested the service in the first place.

Interesting post. I think it would be fair to say that the Stabilization Points still do not address your example of if the Database or Centralized Back-end Web Service fails. Although messages from the client would be placed in the queue, any messages being sent from the web service back to the client will be lost at the time of failure. Depending on what type of load you are queuing up, this can be quite damaging to business. To help protect against this failure, you may implement database failover and load balancing on your web servers in combination with the stabilization points.

One thing that would concern me is if something did go wrong that isn’t corrected within seconds. We can assume that web users are very impatient, and if their page is not responsive within seconds of a request, they will either start clicking refresh rapidly, which could cause major problems such as charging their credit card multiple times, or they will just leave the site, meaning you lost all potential sales or business from that user. If something goes wrong with the backend, you would need to notify the user, but since the user’s request is in the queue, which cannot be processed and will wait until the system is up, the user will never know what happened. Of course, I am sure you could handle this with more fail-safes, but now you are adding more complexity to your hardware architecture as well as the software.

This was a good read and definitely gets you thinking about how to approach this common problem.

@Brian Lagunas

The stabilization point doesn’t make up for failing systems. It merely isolates your application from other systems failing or going into maintenance. I’m also not saying that just having a queue is enough. I’m just saying that there’s more to it than just building software. We should also strive to build software that can survive in a production environment. As for user notifications, there are several approaches for this and there isn’t one true way. It all depends on how the business in question wants to deal with it. Maybe just sending an email to an end user or posting a Twitter message is enough to notify them that the request is being processed. If something goes terribly wrong, we still have the original message, so we could try again or we could send an e-mail to the user saying that we lost his request and that he should try again. When we don’t have these stabilization points, the user gets instant feedback, we lost the request and we still have to apologize. To make matters worse, the request could have been partially handled and partially rolled back (not every system supports atomic transactions).

Again, it all boils down to how much money and effort your business is willing to spend to keep the software up and going at all times.

@Kostas

Using a separate thread can help, but doesn’t add the required stability if the external system is down.