If you need to control the JSON output format of Ollama’s response, keep reading.

Ollama lets you run various models locally on your own machine. Not only can I chat with a model directly using Ollama’s CLI tools, but Ollama also serves an API that I can use to talk to a currently running model.

I quickly learned that each model responds in its own format. Makes sense, because the model designers don’t know what output format you are looking for. But this becomes an issue when trying to parse the responses programmatically because you can’t depend on the output format. For example, some models don’t even respond with JSON by default.

The Few-Shot technique can help a great deal to shape the output, but it still may not give us exact control over the response JSON. Fortunately, Ollama lets us pass a JSON schema with a request, and it will constrain the response to that schema. Note that the response JSON comes stringified inside a single property (message.content for the chat endpoint), which you’ll need to extract from the actual JSON response Ollama provides. For more on this, see my article on Chatting with Ollama’s API.
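As a rough sketch of that extraction step: for a non-streaming chat reply, the stringified JSON sits in message.content, so it takes two parses to reach the structured data. The helper name extractStructuredReply, the sample reply, and its summary field are hypothetical and written by hand for illustration, not captured from a real model.

```typescript
// Only the parts of a non-streaming /api/chat reply that this sketch reads.
interface OllamaChatReply {
  message: { role: string; content: string };
}

// Hypothetical helper: parse the outer reply, then parse the
// stringified JSON that is held in message.content.
function extractStructuredReply<T>(rawBody: string): T {
  const reply = JSON.parse(rawBody) as OllamaChatReply;
  return JSON.parse(reply.message.content) as T;
}

// Hand-written sample reply (an assumption, for illustration only).
const sampleBody = JSON.stringify({
  model: "example-model",
  message: {
    role: "assistant",
    content: JSON.stringify({ summary: "Looks fine" }),
  },
  done: true,
});

const structured = extractStructuredReply<{ summary: string }>(sampleBody);
console.log(structured.summary); // prints "Looks fine"
```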

Here is the chat request I will send to Ollama’s API. I do token replacement on the obvious tokens and {{schema}} is one of those replacements.

{

"model": "{{modelName}}",

"messages": [

{

"role": "user",

"content": "{{code}}"

},

{

"role": "assistant",

"content": "{{systemPrompt}}"

}

],

"stream": false,

"format": "{{schema}}"

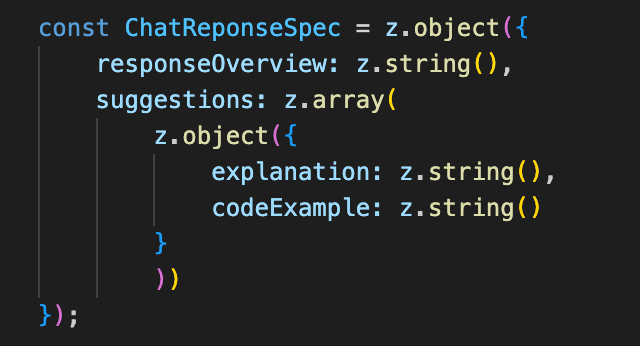

}So, how to get the schema I want to inject into this request? Here is an example using the ‘zod’ library which helps us get from an object to its schema.

```typescript
import { z } from 'zod';
import { zodToJsonSchema } from 'zod-to-json-schema';

// define an object with 'zod'
const ChatResponseSpec = z.object({
  responseOverview: z.string(),
  suggestions: z.array(
    z.object({
      explanation: z.string(),
      codeExample: z.string()
    })
  )
});

// extract that schema using 'zodToJsonSchema'
const schema = zodToJsonSchema(ChatResponseSpec);

// now do the token replacement
// (chatRequest holds the request template shown earlier, as a string)
chatRequest = chatRequest.replace("\"{{schema}}\"", JSON.stringify(schema));
```

With that done (and after completing the other token replacements), I can now pass this to the Ollama API and get the JSON back in the format I requested, regardless of which model I am using.
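One thing to watch with token replacement: if {{code}} is substituted as raw text, any quotes or newlines in the code need JSON escaping first. A possible alternative, sketched below, is to build the request as an object and let JSON.stringify handle the escaping. The names buildChatRequest and sendChat are hypothetical, the roles mirror the template above, and the base URL assumes Ollama's default local port (11434).

```typescript
type ChatMessage = { role: "system" | "user" | "assistant"; content: string };

interface ChatRequest {
  model: string;
  messages: ChatMessage[];
  stream: boolean;
  format: object; // the JSON schema the reply should follow
}

// Hypothetical helper: same fields as the template above, built as an object.
function buildChatRequest(
  modelName: string,
  code: string,
  systemPrompt: string,
  schema: object
): ChatRequest {
  return {
    model: modelName,
    messages: [
      { role: "user", content: code },
      { role: "assistant", content: systemPrompt },
    ],
    stream: false,
    format: schema,
  };
}

// Hypothetical helper: POST the request and return the stringified JSON reply.
// Assumes Ollama is listening on its default local address.
async function sendChat(
  request: ChatRequest,
  baseUrl = "http://localhost:11434"
): Promise<string> {
  const res = await fetch(`${baseUrl}/api/chat`, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(request),
  });
  if (!res.ok) throw new Error(`Ollama returned HTTP ${res.status}`);
  const reply = (await res.json()) as { message: { content: string } };
  return reply.message.content;
}

// A small hand-written schema, standing in for the zod-generated one.
const exampleSchema = {
  type: "object",
  properties: { responseOverview: { type: "string" } },
  required: ["responseOverview"],
};

// Quotes and newlines in the code survive because JSON.stringify escapes them.
const exampleRequest = buildChatRequest(
  "example-model",
  'console.log("hi");\n',
  "Review this code.",
  exampleSchema
);
const exampleBody = JSON.stringify(exampleRequest);
```

In a real run, the string returned by sendChat(exampleRequest) would then be parsed with JSON.parse to get the structured reply.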

Formatting the chat reply JSON makes it so much easier to code against Ollama’s API because I can get all models to reply in the same format. Using zod is a simple approach to making this happen when combined with Ollama’s ‘format’ capability.
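Even with a schema in place, it can be worth checking the parsed reply before coding against it. Below is a minimal sketch of such a check as a plain type guard over the fields defined in the zod object above; the name isChatResponse is hypothetical. In a project that already uses zod, calling safeParse on the same zod object would likely be the more natural choice.

```typescript
interface Suggestion {
  explanation: string;
  codeExample: string;
}

interface ChatResponse {
  responseOverview: string;
  suggestions: Suggestion[];
}

// Hypothetical type guard: true only if the value has the expected shape.
function isChatResponse(value: unknown): value is ChatResponse {
  if (typeof value !== "object" || value === null) return false;
  const v = value as Record<string, unknown>;
  return (
    typeof v.responseOverview === "string" &&
    Array.isArray(v.suggestions) &&
    v.suggestions.every(
      (s) =>
        typeof s === "object" &&
        s !== null &&
        typeof (s as Record<string, unknown>).explanation === "string" &&
        typeof (s as Record<string, unknown>).codeExample === "string"
    )
  );
}

// Hand-written sample values for illustration.
const goodReply: unknown = JSON.parse(
  '{"responseOverview":"ok","suggestions":[{"explanation":"a","codeExample":"b"}]}'
);
const badReply: unknown = JSON.parse('{"responseOverview":"ok"}');

console.log(isChatResponse(goodReply)); // prints true
console.log(isChatResponse(badReply)); // prints false
```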

Happy coding!